AI Is in Its Empire Era

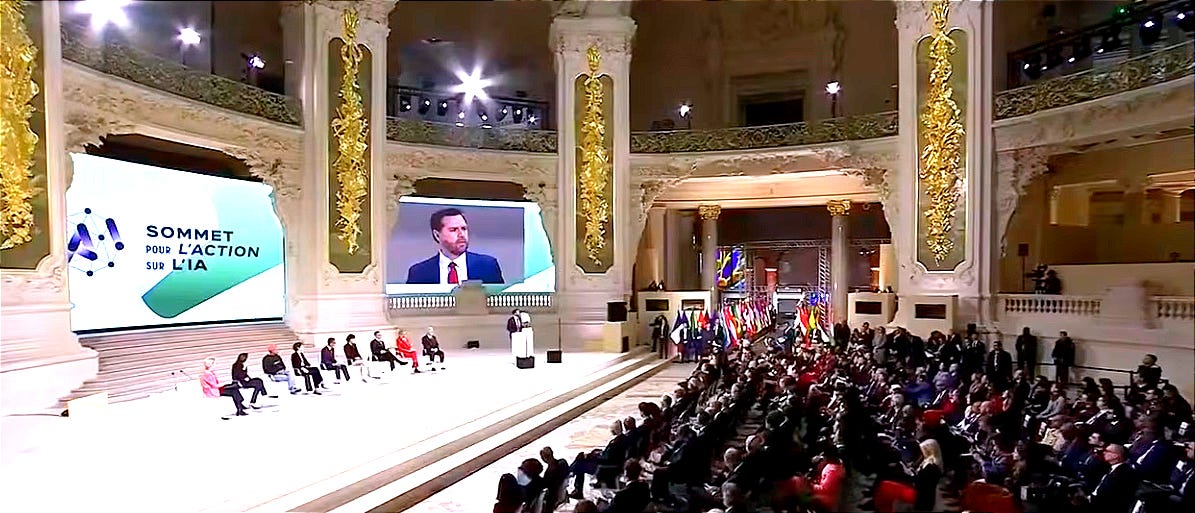

Last week, when J.D. Vance delivered his first foreign speech as vice president at an international AI summit in Paris, he took the opportunity to put forward what the New York Times called a “vision of a coming era of American technological domination.” The Times is soft-pedaling what Vance really articulated: AI’s imperial age has arrived, and the US is calling the shots.

It was a dark, loaded, even threatening speech, and I’d hoped to write about it sooner, but I was interviewing federal tech workers for a piece on the chaos unfolding at home. And then you all left me a little slack-jawed and useless with all the kind words, encouragement, and support after I announced I was officially launching this newsletter as The Job. Seriously, thanks so much for all that. This is a dark time, and this is the best way I know of to pitch in. And all that support has made it clear this is a swing worth taking. I have a lot of ambitious stories in the works right now, and that backing means those really will happen. If you’d like to support this work, too, and you’re able, you can do so below. Thanks again, I’m honestly blown away.

“The Trump administration will ensure that the most powerful AI systems are built in the U.S. with American design,” Vance declared on stage in Paris. “The AI future is not going to be won by hand-wringing about safety, it will be won by building—from reliable power plants to the manufacturing facilities that can produce the chips of the future.”

It was as bellicose a speech as one could make about an industry currently best known for chatbots (American AI will reign supreme, Vance insisted, so Europe, India, and everyone else should get in line and forget about irksome regulations), and yet the diatribe has gone mostly underdiscussed by those outside the AI world. It was, after all, overshadowed almost immediately by Vance’s visit to Munich, where he scolded European nations over their speech and immigration laws and sat down with the far-right German party AfD.

But the speech, with its saber-rattling, its calls to end oversight and regulation, its declaration of intent to further concentrate power, and its equating of American might with industrial AI supremacy, stuck with me. It echoed what was (and is) unfolding back home, where Elon Musk and the DOGE boys are at work excitedly gutting the federal government under the auspices of an “AI-first strategy.”

And it sounded the death knell for a measured approach to AI governance by the US, or any approach to AI governance at all, really. The conference itself had aspired to locate an international framework for the future of AI development; well, that was left in disarray, too. The US and the UK refused to sign even a non-binding declaration pledging to develop AI responsibly and sustainably.

“The AI Summit ends in rupture,” Kate Crawford, a leading AI scholar and the author of Atlas of AI who was in attendance in Paris, tweeted as the summit ended. “AI accelerationists want pure expansion—more capital, energy, private infrastructure, no guard rails. Public interest camp supports labor, sustainability, shared data, safety, and oversight. The gap never looked wider. AI is in its empire era.”

AI is in its empire era. That feels indisputable to me. When Vance and industry leaders like Sam Altman or Elon Musk or the DOGE lieutenants talk about “AI,” they are hardly talking about innovation anymore; they are talking about a project whose aim is to consolidate as much power as possible. AI is a twelve-figure, semi-fictive Stargate project in the Texas desert designed to inspire awe and further investment. AI is more data centers, more training data, more, more, more, despite non-American competitors like DeepSeek demonstrating that other routes are possible. They’re possible, but they’re not desirable, because the American approach to AI is empire-building.

I rang Crawford after the summit, to see if she might expand on her reaction, as it struck me as key to understanding this moment.

“Comparing it to the last two summits which have had a really strong focus on risk and safety, this really shifted into a different register,” Crawford told me from New York. “This was much more about, not only technological inevitability—so no questioning whether AI is useful and helpful—but really moving towards something more like a foreign investment paradigm. It’s a shift towards something which is much more accelerationist, and about maximizing profits for the private sector.”

Vance is ideally suited to make this case, representing both the relentlessly pro-business Trump administration and his former VC circle in Silicon Valley. Recall that Vance himself has invested in AI startups. And this is what led even many in the AI world to despair over the summit’s conclusion: AI safety experts were aghast at Vance’s broad sidelining of their entire movement.

“You’re aware of the alignment problem, as it’s referred to in the AI world, which is that an individual AI system might become misaligned with human values and ethical values and intentions,” Crawford told me. “What we saw at the summit was a different type of alignment problem, which was a misalignment from very concentrated corporate power and the interests of civil society in general.”

“That’s my big concern,” she added. “That’s the real alignment problem: This enormous transfer of wealth to the AI private sector—without guardrails, and without a commitment to governance—represents a very serious problem to the world and everyone who lives in it.”

Whether consciously or not, Vance’s speech posed a version of this “alignment problem,” with the Trump-Vance regime proffered, naturally, as its solution. He criticized “hostile foreign adversaries” (read: China) that “have weaponized AI software to rewrite history, surveil users, and censor speech.”

He continued, warning the world not to partner with such “authoritarian regimes”:

> I would also remind our international friends here today that partnering with such regimes never pays off in the long term. From CCTV to 5G equipment, we're all familiar with cheap tech in the marketplace that's been heavily subsidized and exported by authoritarian regimes. But as I know, and I think some of us in this room have learned from experience, partnering with them means chaining your nation to an authoritarian master that seeks to infiltrate, dig in, and seize your information infrastructure.

Of course, at the very moment Vance was uttering those words, back in the United States, Elon Musk’s Department of Government Efficiency was, often in violation of the law, infiltrating, digging in, and seizing the information infrastructure of the United States government. And DOGE set loose automated AI programs on government IT systems, removing pronouns from email signatures and wiping words like “racial justice” and “transition,” which the administration found too related to “DEI” or “wokeness,” from government records. That is quite literally censoring speech and rewriting history.

It probably does not really need to be said, but Vance’s faux concern about AI under an “authoritarian master” is a joke. He is entirely comfortable with the overtly authoritarian uses of the technology being deployed at home, on his own countrymen. In the very same speech, he repeatedly shouted down the idea that AI should be regulated, or constrained in any way from being put to such uses. His true allegiance is to an imperial AI, one that allows those in power to concentrate more of it, whether they are his friends and mentors in Silicon Valley or his allies, and his boss, in the state his party now controls.

“The word that he used, which I thought was particularly revealing, was ‘handwringing’,” Crawford says. “We don’t want any more handwringing, about safety and risk, this image that it’s been fretting over nothing, when what we really need is to build profitable systems. This is about, effectively, increasing corporate profits at the cost of protecting the populations that will be subject to these systems.”

As for her comment that AI had entered its empire era, she says there were two dimensions to it. First, Emmanuel Macron announced a €109 billion infrastructure spend on AI, following the US’s lead in taking an expansionist, imperial approach to AI development. “This is about rebuilding the French empire around AI — up to this point of course it’s very much been a duopoly between the US and China,” Crawford says. “In a very literal sense, it’s a play for empire-building in France.”

In a more general way, she says, it pertains to how empires have concentrated technological power throughout history. Crawford and a colleague, Vladan Joler, recently published Calculating Empires, a project that “traces technological patterns of colonialism, militarization, automation, and enclosure since 1500” to show how technology has been centralized by empires throughout history. It winds on through today, when, according to the authors, “industrial transformations in AI are concentrating power into even fewer hands, while accelerating polarization and alienation.”

“A technology is developed and then it’s enclosed,” Crawford tells me, “so you see these big enclosures to try and keep particular technologies in very few hands, moving towards highly concentrated industrial power, or in the case of previous centuries—empire power. This very much looks like that.”

It’s all there: Silicon Valley’s takeover of DC, Vance and Trump’s ascent to power, and Musk and his team’s embrace of AI as a tool of statecraft. If Vance’s speech doesn’t officially mark the beginning of the imperial age of AI, then it’s as good an indicator as any that that’s where we’ve wound up.

“It was quite a window into where we’re at,” Crawford says, “and it reveals the playbook of what the US is going to be doing for the next few years.”